horatio

you’ve heard of sex offenders and sex defenders, now get ready for

sex intenders

sex contenders

sex extenders

sex ascenders

sex suspenders

sex descenders

sexy venders

sex attenders

sex engenders

sexmen slenders

omg the number spamming bot did the thing before it was funny you guys

i might try to make a monospace pixel wingdings font that works as a drop-in replacement for VGA-437

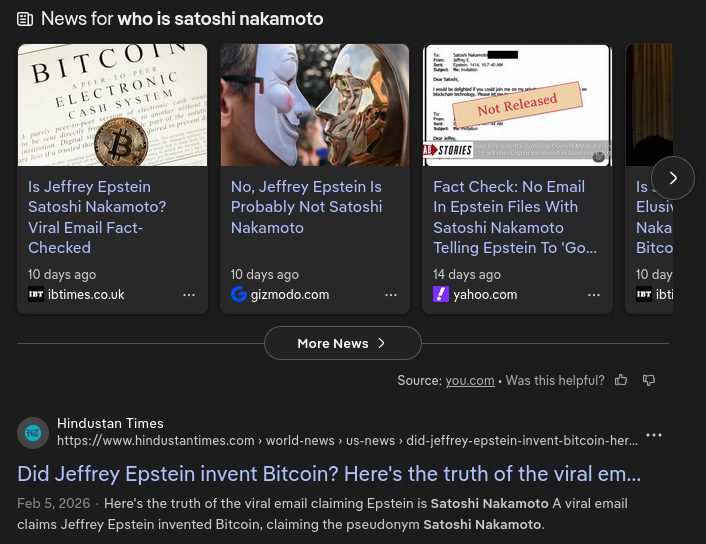

i just looked up “who is Satoshi Nakamoto” (even tho i already knew it was a group pseudonym, just double-checking), and below the duckduckgo chatgpt-wrapper and the wikipedia link is uh

like erm,, what the sigma?

omfg i don’t care that grainger knows what it’s like to be a lineman i don’t even know what a lineman is